CrossSpider

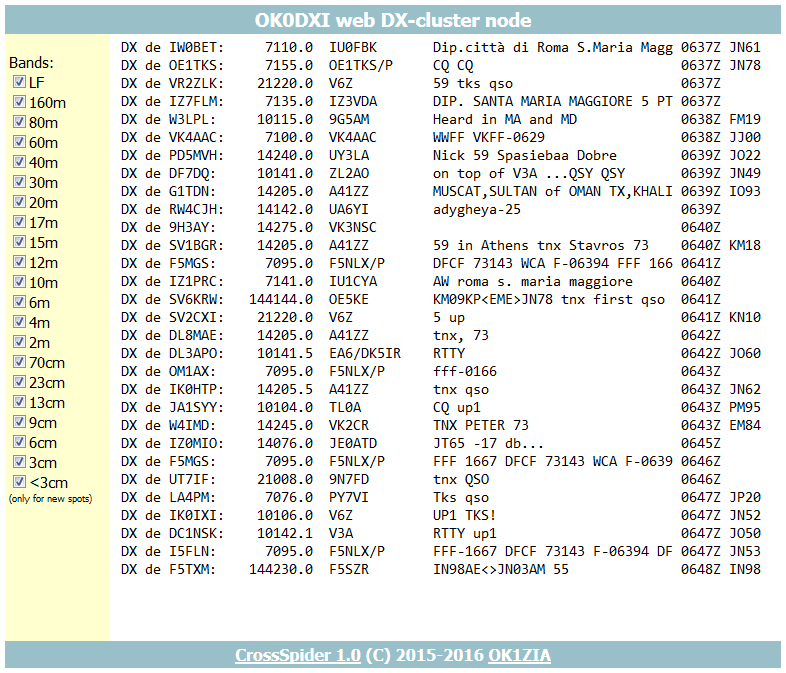

[[Image:CrossSpider.png|thumb|none|786px|The CrossSpider in web browser]] | |||

Latest revision as of 14:17, 25 September 2017

CrossSpider is a web frontend for DX cluster software. Some DX clusters used a Java applet client, but that technology is now rather obsolete, so I wrote this small frontend for OK0DXI (http://ok0dxi.nagano.cz). The name CrossSpider refers to DX Spider, the cluster software used here.

Limitations

- CrossSpider provides read-only access to the DX cluster. It does not handle user connections; it connects as a special user that has no filters set.

- Band filtering is done on the client, so all spots are transferred over the network.
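Client-side band filtering can be illustrated with a short sketch. This is not the CrossSpider code (the real filtering runs in the browser); it is a Python illustration under stated assumptions. The band table, the function names `band_of` and `filter_spots`, and the callsigns are all hypothetical, and real band edges differ by region.

```python
# Hypothetical band table: (name, lower edge in kHz, upper edge in kHz).
# Real band edges depend on the IARU region; these are illustrative only.
BANDS = [
    ("160m", 1800.0, 2000.0),
    ("80m", 3500.0, 4000.0),
    ("40m", 7000.0, 7300.0),
    ("20m", 14000.0, 14350.0),
    ("15m", 21000.0, 21450.0),
    ("10m", 28000.0, 29700.0),
]


def band_of(qrg_khz):
    """Return the band name for a frequency in kHz, or None if out of band."""
    for name, low, high in BANDS:
        if low <= qrg_khz <= high:
            return name
    return None


def filter_spots(spots, wanted_bands):
    """Keep only the spots whose frequency falls into one of the wanted bands."""
    return [s for s in spots if band_of(s["qrg"]) in wanted_bands]


# All spots arrive from the server; the client decides what to show.
spots = [
    {"call": "DL1ABC", "qrg": 14025.0},  # made-up callsigns
    {"call": "OK2XYZ", "qrg": 7010.5},
    {"call": "G4AAA", "qrg": 3520.0},
]
selected = filter_spots(spots, {"20m", "40m"})
```

The trade-off is the one named in the list above: the server stays simple, but every spot crosses the network even if the user displays only one band.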

How it works

On the DX cluster computer, a small Perl daemon, "robot.pl", is running. It connects to the DX cluster via telnet (more precisely, using the netcat program).

The daemon inserts all spots and other messages into a PostgreSQL database.

The other part is a web page that uses HTML5/Ajax to fetch the latest spots from PostgreSQL.
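To make the daemon's job more concrete, here is a minimal sketch of splitting one spot line into fields before it is stored. It is written in Python, not taken from robot.pl (which is Perl), and it assumes the common "DX de SPOTTER: FREQ CALL comment HHMMZ" line layout. The regular expression, the function name `parse_spot`, and the sample callsigns are illustrative. How robot.pl actually maps fields to the `call`, `qrg` and `spot` columns is not stated here, so the sketch does not assume any mapping.

```python
import re
from decimal import Decimal

# Assumed layout of a spot line: "DX de SPOTTER:  FREQ  CALL  comment  HHMMZ".
SPOT_RE = re.compile(
    r"^DX de (?P<spotter>[A-Z0-9/#-]+):\s+"
    r"(?P<qrg>\d+(?:\.\d+)?)\s+"
    r"(?P<dx>[A-Z0-9/]+)\s+"
    r"(?P<comment>.*?)\s*"
    r"(?P<time>\d{4})Z\b"
)


def parse_spot(line):
    """Return a dict of spot fields, or None if the line is not a spot."""
    match = SPOT_RE.match(line)
    if match is None:
        return None
    return {
        "spotter": match.group("spotter"),
        "qrg": Decimal(match.group("qrg")),  # kHz, fits numeric(10,1)
        "dx": match.group("dx"),
        "comment": match.group("comment"),
        "time": match.group("time"),
    }


# Made-up example line; callsigns are hypothetical.
parsed = parse_spot("DX de OK1ZIA:     14025.0  DL1ABC       CQ test                        1417Z")
not_a_spot = parse_spot("WWV de W0MU <18>:   SFI=72, A=5, K=1")
```

Lines that do not match (announcements, WWV data and so on) return `None` here; a real daemon would store them differently, since the text above says other messages are inserted too.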
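One plausible way for the web page to fetch only new spots is to remember the highest `id` it has seen and ask for rows after it. The sketch below illustrates that idea; it is an assumption about the approach, not a description of ajax.php. To stay self-contained it uses Python's built-in sqlite3 with an in-memory database as a stand-in for PostgreSQL, and the helper names `open_demo_db`, `add_line` and `lines_after` are hypothetical.

```python
import sqlite3


def open_demo_db():
    """Create an in-memory stand-in for the 'lines' table (SQLite, not PostgreSQL)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "create table lines(id integer primary key autoincrement, "
        "stamp text, call text, qrg real, spot text)"
    )
    return conn


def add_line(conn, call, qrg, spot):
    """Insert one row, roughly what the daemon does for each spot."""
    conn.execute(
        "insert into lines(stamp, call, qrg, spot) "
        "values (datetime('now'), ?, ?, ?)",
        (call, qrg, spot),
    )


def lines_after(conn, last_id, limit=50):
    """Return rows newer than last_id, oldest first."""
    cur = conn.execute(
        "select id, call, qrg, spot from lines where id > ? order by id limit ?",
        (last_id, limit),
    )
    return cur.fetchall()


conn = open_demo_db()
add_line(conn, "DL1ABC", 14025.0, "CQ test")  # made-up rows
add_line(conn, "OK2XYZ", 7010.5, "up 1")

first_batch = lines_after(conn, 0)      # initial page load
last_seen = first_batch[-1][0]          # remember the highest id
add_line(conn, "G4AAA", 3520.0, "")     # a new spot arrives
second_batch = lines_after(conn, last_seen)  # next poll returns only the new row
```

A query of this shape is served well by an index on `id`, which matches the index created in the Database step of the install instructions.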

Download

http://ok1zia.nagano.cz/crossspider

Install

Database

First set up the PostgreSQL database. In this text the database name is crossspider and the user is robot:

    # apt-get install libdbd-pg-perl postgresql postgresql-client
    # su - postgres
    $ createdb crossspider
    $ psql crossspider
    crossspider=# create table lines(id serial, stamp timestamp with time zone, call text, qrg numeric(10,1), spot text);
    crossspider=# create index lines_id_idx on lines(id);
    crossspider=# create user robot with password '01001';
    crossspider=# grant all on lines TO robot;
    crossspider=# grant all on lines_id_seq TO robot;
    crossspider=# \q
    $ exit
    #

Allow access for the user robot. Edit /etc/postgresql/*/main/pg_hba.conf and add this line:

    # TYPE  DATABASE     USER   ADDRESS  METHOD
    local   crossspider  robot           password

Robot

The name Robot is a reference to PE1NWL's DX robot (http://www.gooddx.net/).

Of course you have to install Perl first, but it is probably already part of your distribution. Copy robot.pl somewhere (for example /usr/local/sbin).

If you use a current Linux distribution, you probably use systemd for service management. Its config directory is /etc/systemd/system. Create a file named robot.service there:

    [Unit]
    Description=DX-robot daemon
    # spider.service depends on how you start the DX Spider
    Requires=postgresql.service spider.service
    After=network.target postgresql.service spider.service

    [Service]
    Type=simple
    User=nobody
    StandardOutput=journal
    StandardError=journal
    ExecStart=/usr/bin/perl /usr/local/sbin/robot.pl
    Restart=on-failure
    RestartSec=10

    [Install]
    WantedBy=multi-user.target

Now set up the service:

    # systemctl daemon-reload
    # systemctl enable robot.service

If you use an older distro, you can use the traditional /etc/rc.local or something similar:

    # /etc/rc.local
    nohup /usr/bin/perl /usr/local/sbin/robot.pl &

Edit the robot.pl file and modify its head section to set up access to the database and the DX cluster. Of course you also have to enable access in your DX cluster software.

Web server

If you do not have a web server with PHP support and want to use Apache, run:

    # apt-get install apache2 libapache2-mod-php5 php5-pgsql

Web page

Download all the *.txt files, copy them to your website and rename them to *.php. (The files on the download server cannot be named *.php, because you would then get the output of the PHP scripts rather than their source code.) Edit the first part of ajax.php to set up access to the database.

Next development

I'm not going to develop new complex features, at least not per-user access to the DX cluster. If you want a new feature, the best way is to write it and send me a patch or the affected files.

73 Lada, OK1ZIA